Docker overview

Docker began as an internal project at dotCloud, started by the company's founder, Solomon Hykes, in France. It built on dotCloud's years of experience with cloud service technology and was open-sourced in March 2013 under the Apache 2.0 license. The main project code is maintained on GitHub, where the project has since been renamed Moby. Docker later joined the Linux Foundation and helped establish the Open Container Initiative (OCI).

After being open-sourced, Docker gained widespread attention and discussion, with its GitHub project accumulating over 67,000 stars and more than 18,000 forks to date. Due to the popularity of the Docker project, dotCloud decided to rebrand as Docker in late 2013. Docker was initially developed and implemented on Ubuntu 12.04, while Red Hat began supporting Docker from RHEL 6.5, and Google extensively utilized Docker in its PaaS products.

Docker is written in Go, a programming language introduced by Google, and builds on Linux kernel technologies such as cgroups, namespaces, and union file systems such as OverlayFS. It encapsulates and isolates processes at the operating system level, which makes it a form of operating system-level virtualization. Because the isolated processes are independent of the host and of one another, they are called containers. The initial implementation was based on LXC; from version 0.9 onwards, Docker replaced LXC as the default with its self-developed libcontainer, and from version 1.11 it evolved further to use runC and containerd.
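The namespace side of this isolation can be observed from any ordinary process. The following is a minimal sketch, assuming a Linux host with /proc mounted; the helper name list_namespaces is made up for illustration and is not part of Docker.

```python
import os


def list_namespaces(pid="self"):
    """Return {namespace_name: identifier} for a process, read from /proc.

    On Linux, each entry in /proc/<pid>/ns is a symlink whose target names
    the namespace the process belongs to. Two processes that share a
    namespace see the same identifier. Without /proc the result is empty.
    """
    ns_dir = f"/proc/{pid}/ns"
    namespaces = {}
    try:
        names = sorted(os.listdir(ns_dir))
    except OSError:
        return namespaces  # not Linux, /proc not mounted, or no such process
    for name in names:
        try:
            namespaces[name] = os.readlink(os.path.join(ns_dir, name))
        except OSError:
            continue  # entry vanished or is not readable; skip it
    return namespaces


if __name__ == "__main__":
    for name, ident in list_namespaces().items():
        print(f"{name:18} {ident}")
```

Running this once on the host and once inside a container would typically show different identifiers for namespaces such as pid, net, and mnt, which is what keeps the two views of the system apart.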

What is Docker

Docker is an open platform for developing, shipping, and running applications. Docker enables you to separate your applications from your infrastructure so you can deliver software quickly. With Docker, you can manage your infrastructure in the same ways you manage your applications. By taking advantage of Docker's methodologies for shipping, testing, and deploying code, you can significantly reduce the delay between writing code and running it in production.

Why Use Docker

As an emerging virtualization method, Docker offers numerous advantages compared to traditional virtualization approaches.

More Efficient Resource Utilization

Since containers do not require hardware virtualization or running a complete operating system, Docker utilizes system resources more efficiently. Whether it's application execution speed, memory consumption, or file storage speed, Docker outperforms traditional virtualization technologies. Consequently, compared to virtual machine technology, a host with the same configuration can often run more applications.

Faster Start-up Time

Traditional virtual machine technology often takes minutes to start an application service, while Docker containers can achieve near-instantaneous start-up times, often within seconds or even milliseconds, as they run directly on the host kernel without the need for a complete operating system boot. This significantly reduces development, testing, and deployment time.

Consistent Runtime Environment

A common issue during the development process is environment inconsistency. Due to differences between development, testing, and production environments, some bugs may go undetected during development. Docker images provide a complete runtime environment, except for the kernel, ensuring application runtime environment consistency and preventing the "but it works on my machine" problem.

Continuous Delivery and Deployment

For developers and operations (DevOps) personnel, the ideal scenario is to create or configure once and have it run correctly anywhere.

Docker enables continuous integration, continuous delivery, and deployment by customizing application images. Developers can build images using Dockerfiles and integrate them with continuous integration systems for testing. Operations teams can then quickly deploy these images in production environments, even integrating with continuous delivery/deployment systems for automated deployments.

Using Dockerfiles makes image building transparent, allowing both development and operations teams to understand the application's runtime environment and deployment requirements, facilitating better image deployment in production.

Easier Migration

Since Docker ensures runtime environment consistency, application migration becomes more straightforward. Docker can run on various platforms, including physical machines, virtual machines, public clouds, private clouds, and even laptops, with consistent results. Users can easily migrate applications running on one platform to another without worrying about runtime environment changes preventing the application from functioning correctly.

Easier Maintenance and Extensibility

Docker's layered storage and image technology make it easier to reuse duplicated application components, simplifying application maintenance and updates. Extending images based on base images also becomes very simple. Additionally, the Docker team, along with various open-source project teams, maintains a large collection of high-quality official images that can be used directly in production environments or as a basis for further customization, significantly reducing the cost of creating application service images.

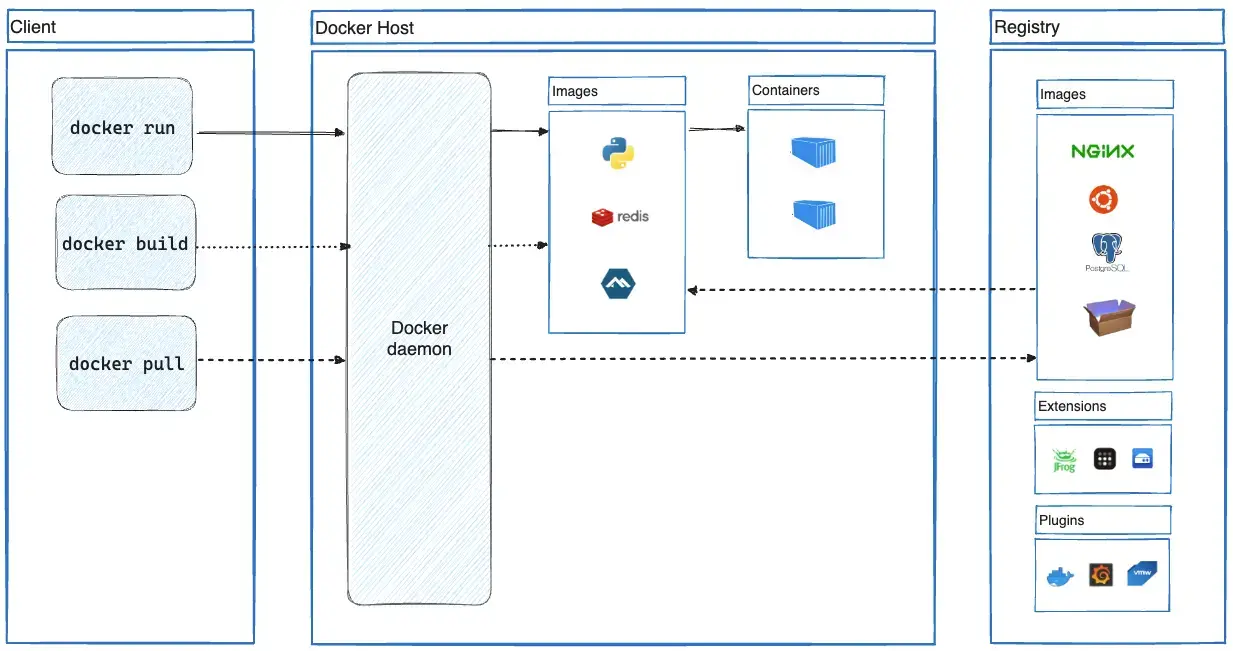

Docker architecture

Docker uses a client-server architecture. The Docker client communicates with the Docker daemon, which is responsible for building, running, and distributing your Docker containers. The Docker client and daemon can run on the same system, or you can connect a Docker client to a remote Docker daemon. The Docker client and daemon communicate using a REST API, over UNIX sockets or a network interface. Another Docker client is Docker Compose, which allows you to work with applications consisting of a set of containers.
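To make the client-server split concrete, the sketch below speaks HTTP to the daemon over a UNIX socket using only the Python standard library. It assumes a Linux or macOS host, the conventional socket path /var/run/docker.sock, and the Engine API's /_ping endpoint; the class and function names are illustrative, and a real client would also handle API versioning, TLS, and TCP endpoints.

```python
import http.client
import socket


class UnixHTTPConnection(http.client.HTTPConnection):
    """HTTP connection that talks over a UNIX domain socket instead of TCP."""

    def __init__(self, socket_path, timeout=2.0):
        # The host name only fills the Host header; it is never resolved.
        super().__init__("localhost", timeout=timeout)
        self.socket_path = socket_path

    def connect(self):
        sock = socket.socket(socket.AF_UNIX, socket.SOCK_STREAM)
        try:
            sock.settimeout(self.timeout)
            sock.connect(self.socket_path)
        except OSError:
            sock.close()
            raise
        self.sock = sock


def ping_daemon(socket_path="/var/run/docker.sock"):
    """Send GET /_ping; return the response body, or None if unreachable."""
    conn = UnixHTTPConnection(socket_path)
    try:
        conn.request("GET", "/_ping")
        return conn.getresponse().read().decode("utf-8", errors="replace")
    except (OSError, http.client.HTTPException):
        return None  # no socket at that path, no permission, or no daemon
    finally:
        conn.close()


if __name__ == "__main__":
    print(ping_daemon())
```

The same request sent by the docker CLI, Docker Compose, or any other client reaches the same daemon, which is why several clients can coexist on one host.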

The Docker daemon

The Docker daemon (dockerd) listens for Docker API requests and manages Docker objects such as images, containers, networks, and volumes. A daemon can also communicate with other daemons to manage Docker services.

The Docker client

The Docker client (docker) is the primary way that many Docker users interact with Docker. When you use commands such as docker run, the client sends these commands to dockerd, which carries them out. The docker command uses the Docker API. The Docker client can communicate with more than one daemon.
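As a rough illustration of how a command becomes API traffic, the sketch below lists the two core requests behind a simplified docker run: create a container, then start it. The endpoint paths and the Image and Cmd fields follow the Docker Engine API; the function name is hypothetical, the version prefix is omitted, and the real client does more (pulling a missing image, attaching streams, waiting for exit).

```python
import json
from urllib.parse import urlencode


def plan_run_requests(image, command=None, name=None):
    """Return the (method, path, body) triples for a simplified `docker run`.

    "{id}" is a placeholder for the container ID returned by the create call.
    """
    create_path = "/containers/create"
    if name is not None:
        create_path += "?" + urlencode({"name": name})
    body = {"Image": image}
    if command:
        body["Cmd"] = list(command)
    return [
        ("POST", create_path, json.dumps(body, sort_keys=True)),
        ("POST", "/containers/{id}/start", None),
    ]


if __name__ == "__main__":
    for method, path, body in plan_run_requests("ubuntu", ["echo", "hi"]):
        print(method, path, body or "")
```

Nothing here contacts a daemon; the function only builds the requests, which keeps the mapping from command to API calls easy to inspect.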

Docker Desktop

Docker Desktop is an easy-to-install application for your Mac, Windows or Linux environment that enables you to build and share containerized applications and microservices. Docker Desktop includes the Docker daemon (dockerd), the Docker client (docker), Docker Compose, Docker Content Trust, Kubernetes, and Credential Helper. For more information, see Docker Desktop.

Docker registries

A Docker registry stores Docker images. Docker Hub is a public registry that anyone can use, and Docker looks for images on Docker Hub by default. You can even run your own private registry.

When you use the docker pull or docker run commands, Docker pulls the required images from your configured registry. When you use the docker push command, Docker pushes your image to your configured registry.
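Which registry an image name refers to follows from the name itself. The sketch below approximates how a short name such as ubuntu expands to a registry, repository, and tag, with Docker Hub (docker.io) as the default. The function name is hypothetical, and digests of the form name@sha256:... are deliberately not handled.

```python
def normalize_reference(ref):
    """Split an image reference into (registry, repository, tag).

    Simplified model: digest references ("@sha256:...") are not supported.
    """
    first, slash, rest = ref.partition("/")
    # The first component names a registry only if it looks like a host:
    # it contains a dot or a port, or is literally "localhost".
    if slash and ("." in first or ":" in first or first == "localhost"):
        registry, remainder = first, rest
    else:
        registry, remainder = "docker.io", ref
    # A tag, if present, follows the last colon in the last path component.
    last_slash = remainder.rfind("/")
    colon = remainder.rfind(":")
    if colon > last_slash:
        repository, tag = remainder[:colon], remainder[colon + 1:]
    else:
        repository, tag = remainder, "latest"
    # Official images on Docker Hub live under the "library/" namespace.
    if registry == "docker.io" and "/" not in repository:
        repository = "library/" + repository
    return registry, repository, tag


if __name__ == "__main__":
    for ref in ("ubuntu", "myuser/app:1.0", "registry.example.com:5000/team/app"):
        print(ref, "->", normalize_reference(ref))
```

The host registry.example.com:5000 is a placeholder for a private registry; any name whose first component looks like a host is sent there instead of Docker Hub.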

Docker objects

When you use Docker, you are creating and using images, containers, networks, volumes, plugins, and other objects. This section is a brief overview of some of those objects.

Images

An image is a read-only template with instructions for creating a Docker container. Often, an image is based on another image, with some additional customization. For example, you may build an image which is based on the ubuntu image, but installs the Apache web server and your application, as well as the configuration details needed to make your application run.

You might create your own images or you might only use those created by others and published in a registry. To build your own image, you create a Dockerfile with a simple syntax for defining the steps needed to create the image and run it. Each instruction in a Dockerfile creates a layer in the image. When you change the Dockerfile and rebuild the image, only those layers which have changed are rebuilt. This is part of what makes images so lightweight, small, and fast, when compared to other virtualization technologies.
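The layer reuse described above can be modeled in a few lines. This is a simplified sketch, not the builder's actual algorithm: each layer's cache key is a hash of its instruction text chained with its parent's key, so editing one instruction also invalidates every layer after it. The real build cache additionally considers things such as the contents of copied files. The function names and the sample instructions are illustrative.

```python
import hashlib


def layer_keys(instructions):
    """Return one cache key per instruction; each key chains in its parent."""
    keys, parent = [], ""
    for instruction in instructions:
        digest = hashlib.sha256((parent + "\n" + instruction).encode()).hexdigest()
        keys.append(digest)
        parent = digest
    return keys


def build(instructions, cache):
    """Simulate a build and return the instructions that had to be rebuilt.

    `cache` is a set of layer keys from earlier builds, updated in place.
    """
    rebuilt = []
    for instruction, key in zip(instructions, layer_keys(instructions)):
        if key not in cache:
            rebuilt.append(instruction)  # cache miss: this layer is rebuilt
            cache.add(key)
    return rebuilt


if __name__ == "__main__":
    cache = set()
    v1 = ["FROM ubuntu", "RUN install apache2", "COPY app-v1 /srv/app", "CMD start"]
    v2 = ["FROM ubuntu", "RUN install apache2", "COPY app-v2 /srv/app", "CMD start"]
    print(build(v1, cache))  # first build: every layer is new
    print(build(v2, cache))  # only the COPY layer and the one after it
```

This is also why frequently changing steps are usually placed near the end of a Dockerfile: everything before them stays cached.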

Containers

A container is a runnable instance of an image. You can create, start, stop, move, or delete a container using the Docker API or CLI. You can connect a container to one or more networks, attach storage to it, or even create a new image based on its current state.

By default, a container is relatively well isolated from other containers and its host machine. You can control how isolated a container's network, storage, or other underlying subsystems are from other containers or from the host machine.

A container is defined by its image as well as any configuration options you provide to it when you create or start it. When a container is removed, any changes to its state that aren't stored in persistent storage disappear.
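The relationship between a read-only image, a container's own changes, and persistent storage can be pictured with a toy model. The sketch below uses collections.ChainMap as a stand-in for a union file system: reads fall through from the container's writable layer to the image layers, while writes never touch the image. The Container class and the /data/ mount point are hypothetical and only illustrate the idea; this is not how Docker stores files.

```python
from collections import ChainMap


class Container:
    """Toy container: a private writable layer over read-only image layers."""

    def __init__(self, image_layers, volume=None):
        # The writable layer is discarded together with the container.
        self.writable = {}
        # The volume is owned by the caller and outlives the container.
        self.volume = volume if volume is not None else {}
        # Lookups search the writable layer first, then image layers top-down.
        self.fs = ChainMap(self.writable, *reversed(image_layers))

    def write(self, path, data):
        # Paths under /data/ go to the volume; the rest to the writable layer.
        target = self.volume if path.startswith("/data/") else self.writable
        target[path] = data

    def read(self, path):
        if path.startswith("/data/"):
            return self.volume[path]
        return self.fs[path]


if __name__ == "__main__":
    image = [{"/etc/os-release": "ubuntu"}, {"/srv/app/config": "default"}]
    volume = {}
    first = Container(image, volume)
    first.write("/srv/app/config", "edited")
    first.write("/data/db", "rows")
    del first  # removing the container drops its writable layer
    second = Container(image, volume)
    print(second.read("/srv/app/config"), second.read("/data/db"))
```

A second container created from the same image starts from the original configuration, while data written to the shared volume is still there.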